Detection and Mitigation of Abusive Content

Online content moderation is one area that artificial intelligence is reshaping: AI systems identify abusive content and limit its spread through filtering. Large social media platforms use AI-based algorithms to scan millions of posts every day. For example, Facebook has reported that over 94% of the hate speech it removed from the platform last year was found by its AI systems before anyone reported it. These systems are trained on large datasets of past abuse, which lets them recognize similar abusive patterns in new user content.

Real-time Prevention and User Protection

AI techniques are no longer only reactive; they are becoming proactive. Some sites use AI to give users real-time feedback, warning them before a comment is published that its language could be perceived as harmful. For instance, Instagram introduced a tool that warns users before posting if their comment may be considered offensive. Anecdotally, this practice has reduced the number of abusive comments by prompting users to revise their posts before hitting "send".
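The warn-before-posting flow described above can be sketched in a few lines. This is a minimal illustration, not how Instagram or any other platform implements it: the `FLAGGED_TERMS` lexicon, its weights, the `WARN_THRESHOLD` cutoff, and both function names are hypothetical, and a production system would use a trained classifier rather than a word list.

```python
import re

# Hypothetical lexicon with per-term weights (assumption for illustration only).
# A real system would score comments with a trained model instead.
FLAGGED_TERMS = {"idiot": 0.6, "stupid": 0.5, "loser": 0.5}
WARN_THRESHOLD = 0.5  # assumed cutoff above which the user is warned


def offensiveness_score(comment: str) -> float:
    """Return the highest weight among flagged terms found in the comment."""
    words = re.findall(r"[a-z']+", comment.lower())
    return max((FLAGGED_TERMS.get(word, 0.0) for word in words), default=0.0)


def review_before_posting(comment: str) -> dict:
    """Decide whether to show a warning before the comment is published."""
    score = offensiveness_score(comment)
    return {"warn": score >= WARN_THRESHOLD, "score": score}
```

The key design point is that the check runs before publication and only warns: the user still decides whether to edit or post the comment.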

Improving User Report Mechanisms

AI also improves user reporting systems by prioritizing reports so that the most critical ones are handled first. AI algorithms assess the severity and context of reported content faster than standard approaches and quickly route urgent cases to human moderators. This prioritization helps curb abuse and paves the way for a safer online space.
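One way to picture report prioritization is a priority queue that hands moderators the most severe report first. The sketch below assumes each report already carries a severity score from some model; the `Report` fields, the `priority` weighting, and the `ReportQueue` class are all hypothetical names chosen for illustration.

```python
import heapq
import itertools
from dataclasses import dataclass


@dataclass
class Report:
    content_id: str
    severity: float      # assumed model score in [0, 1]
    reporter_count: int  # number of users who reported this content


def priority(report: Report) -> float:
    """Hypothetical weighting: severity dominates, repeat reports add a little."""
    return report.severity * 10 + min(report.reporter_count, 10) * 0.1


class ReportQueue:
    """Queue that returns the highest-priority report first."""

    def __init__(self):
        self._heap = []
        self._counter = itertools.count()  # tie-breaker preserves insertion order

    def push(self, report: Report) -> None:
        # heapq is a min-heap, so the priority is negated to pop the largest first.
        heapq.heappush(self._heap, (-priority(report), next(self._counter), report))

    def pop(self) -> Report:
        return heapq.heappop(self._heap)[2]
```

With this ordering, a single report scored as a likely threat is reviewed before a low-severity spam post that several users reported.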

Solving for Scale

The sheer volume of online content can overwhelm human moderators, but AI scales in ways that humans cannot. Take YouTube, for instance: the platform receives hours of new video every second and relies on machine learning models to screen it. YouTube removed over 6 million videos in the first three months of 2020 alone, about 75% of which were first spotted by AI.

Ethical Considerations and Data Limitations

As effective as AI is in the fight against online abuse, it is not without limitations. For example, if the training data is biased, the AI system may judge abuse unfairly. To address this, companies continually adjust their models and train them on more varied data so that they treat users more equitably.
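One simple way to check for the kind of unfairness described above is to compare how often benign posts are wrongly flagged for different groups of users. The sketch below is an assumption-laden illustration: the record layout `(group, predicted_abusive, actually_abusive)` and the function name are hypothetical, and real audits use richer metrics and carefully labeled data.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Return, per group, the share of benign posts that were flagged as abusive.

    Each record is a tuple: (group, predicted_abusive, actually_abusive).
    """
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, predicted_abusive, actually_abusive in records:
        if not actually_abusive:
            benign[group] += 1
            if predicted_abusive:
                flagged[group] += 1
    # Groups with no benign posts are omitted to avoid division by zero.
    return {group: flagged[group] / benign[group] for group in benign}
```

A large gap between groups suggests the model is penalizing some users' ordinary language more than others, which is a signal to revisit the training data.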

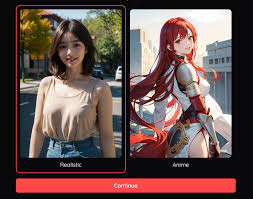

Demystifying NSFW AI to make talking to strangers a bit less risky

As it matures, artificial intelligence is becoming one of the best ways to combat online abuse, helping platforms maintain safe spaces where people can engage with one another in positive, respectful ways. Specialized systems such as NSFW AI, developed to detect NSFW content, show the need for nuanced approaches to different kinds of online interaction. These improvements are a meaningful step toward a safe and non-discriminatory online space.